This is my OLD website; my new one is here:

I'm leaving this wiki online as an archive of my older and smaller works. Enjoy!

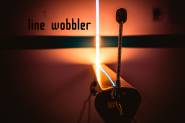

Hi! I'm Robin Baumgarten, a Berlin-based interactive installation artist and experimental hardware game developer, and this is a little website I use to keep track of my experiments. There are a lot of odd hardware controllers in here that I started getting into over the last few years, and some older games. My best-known game is Line Wobbler, and I'm now working on Wobble Garden.

If you want to know more, please contact me directly! Also, I'm @Robin_B on Twitter, follow me now!

Follow me for my newest experiments: @Robin_B